What are Autoencoders in Deep Learning?

- Posted by 3.0 University

- Categories Emerging Technology

- Date March 5, 2024

Immerse yourself in the world of autoencoders in deep learning. Our detailed guide explains how they enable data compression, feature learning, and anomaly detection.

Autoencoders are a core concept in deep learning and an important building block in neural network design. Their ability to learn, automatically, how to compress and decompress data has sparked interest across many technology sectors.

But what exactly are autoencoders, and why do they matter in the broader field of machine learning?

This article explains what autoencoders are, how they work, where they are applied, and what opportunities they present for artificial intelligence.

Introduction to Autoencoders

Fundamentally, an autoencoder is a type of artificial neural network that learns efficient representations of data without supervision: no labels are needed, because the input itself serves as the training target.

Autoencoders were originally designed for dimensionality reduction, but their versatility has grown, and they are now also used for tasks such as denoising and feature learning.

Understanding the Mechanics of Autoencoders

An autoencoder first compresses (encodes) its input into a lower-dimensional space and then reconstructs (decodes) it back to its original form.

It consists of two main parts: the encoder and the decoder.

The key lies in the encoder's ability to capture the essential properties of the data, which allows the decoder to produce a close reconstruction of the original input.
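The encode-then-reconstruct flow can be sketched in plain Python, without assuming any deep learning framework. The sizes (a 4-feature input squeezed into a 2-unit code), the sigmoid activation, and the names `encode`, `decode`, `W_enc`, and `W_dec` are illustrative assumptions; the weights are random, so the reconstruction is meaningless until the network is trained.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(x, weights, biases, activation=None):
    """Fully connected layer: y_j = act(sum_i W[j][i] * x[i] + b[j])."""
    out = []
    for w_row, b in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(w_row, x)) + b
        out.append(activation(z) if activation else z)
    return out

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
INPUT_DIM, CODE_DIM = 4, 2  # hypothetical sizes: 4 features squeezed into a 2-unit code

# Encoder maps 4 -> 2, decoder maps 2 -> 4 (untrained, random weights).
W_enc, b_enc = random_matrix(CODE_DIM, INPUT_DIM, rng), [0.0] * CODE_DIM
W_dec, b_dec = random_matrix(INPUT_DIM, CODE_DIM, rng), [0.0] * INPUT_DIM

def encode(x):
    return dense(x, W_enc, b_enc, activation=sigmoid)

def decode(h):
    return dense(h, W_dec, b_dec)  # linear output layer

x = [0.2, 0.9, 0.1, 0.7]
h = encode(x)      # compressed code
x_hat = decode(h)  # reconstruction with the same size as x
print(len(h), len(x_hat))  # 2 4
```

Frameworks such as PyTorch or Keras supply ready-made layers, but the data flow is the same: input, smaller code, reconstruction.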

Some Facts

Researchers use autoencoders and their variants in anomaly detection, machine translation, and scene segmentation, because they are simple to train, can be stacked into multiple layers, and generalize well.

The encoder-decoder structure of autoencoders is also reflected in the widely used transformer architecture.

Inspired by this idea, one study proposes the Lightning-Stacked Autoencoder (LCSAE), a deep learning model for compressing lightning electromagnetic pulse (LEMP) data.

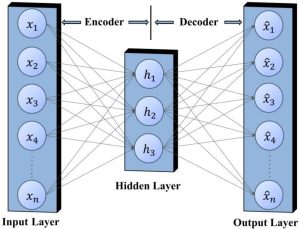

The figure shows a simple autoencoder model with input, output, and hidden layers.

The goal is to minimize the difference between the input x and the output x̂ by learning a suitable encoding in the intermediate hidden layer h.

To reduce dimensionality, h is given fewer units (features) than x.

(Image Courtesy: MDPI.)
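Using the figure's notation (input x, hidden layer h, output x̂), the setup can be written compactly as below. The encoder f_θ, the decoder g_φ, the dimensions n and k, and the choice of squared error as the loss are notational assumptions for this sketch rather than details taken from the figure's source.

$$
h = f_\theta(x), \qquad \hat{x} = g_\phi(h) = g_\phi\big(f_\theta(x)\big), \qquad x \in \mathbb{R}^{n},\; h \in \mathbb{R}^{k},\; k < n
$$

$$
\min_{\theta,\,\phi} \; \frac{1}{Nn} \sum_{i=1}^{N} \big\lVert x^{(i)} - g_\phi\big(f_\theta(x^{(i)})\big) \big\rVert_2^{2}
$$

Here N is the number of training samples, and the condition k < n is what makes h a bottleneck.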

The Mathematical Foundation

An autoencoder is typically trained and evaluated on how well it minimizes the difference between the original input and its reconstruction, usually measured with a loss function such as the mean squared error (MSE).
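For a single sample, the mean squared error is the average squared difference between corresponding input and reconstructed values. The short Python sketch below computes it; the function name and the toy values are illustrative.

```python
def mean_squared_error(x, x_hat):
    """Average of squared element-wise differences between input and reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("input and reconstruction must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

x = [1.0, 2.0, 3.0, 4.0]
x_hat = [1.0, 2.5, 2.0, 4.0]  # a hypothetical, slightly imperfect reconstruction
print(mean_squared_error(x, x_hat))  # 0.3125
```

A perfect reconstruction gives an MSE of 0; training adjusts the weights to push this value down across the whole dataset.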

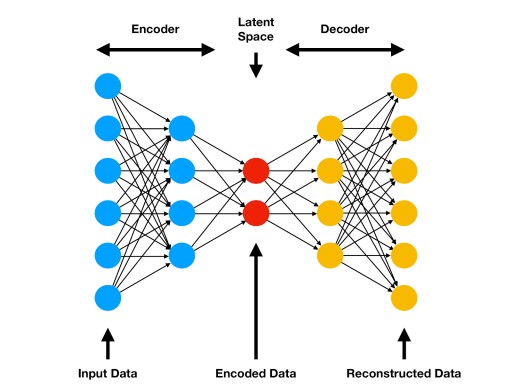

The latent space is the core of an autoencoder: it holds a compressed representation of the input data.

Tishby et al. (1999) introduced the information bottleneck principle.

Applied to neural networks, it suggests that meaningful information can be extracted by limiting how much information can pass through the network, which forces the network to learn a compressed representation of its input.

(Image Courtesy: Neptune Ai.)

This compressed representation reduces both the dimensionality and the complexity of the data [Deep Learning and the Information Bottleneck Principle, Tishby 2015].

In effect, the network learns to discard irrelevant detail and keep the aspects of the data that matter for the broader underlying concepts.

Autoencoder Applications in Deep Learning

Autoencoders can perform a wide range of tasks, from improving image quality (for example, by removing noise) to detecting irregularities in data patterns.

They also contribute significantly to feature learning, which lets machines identify meaningful patterns in data by themselves, without labeled examples.

The AI Lexicon recognizes autoencoders for their unsupervised data representation methods, which are crucial for data denoising, dimensionality reduction, and anomaly detection.
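Denoising autoencoders are trained on deliberately corrupted inputs, while the loss compares the output with the clean original. The sketch below only prepares such training pairs; the Gaussian noise level (`sigma=0.1`), the clipping to the [0, 1] pixel range, the toy 4-pixel "images", and the helper names are assumptions made for illustration.

```python
import random

def add_gaussian_noise(x, sigma, rng):
    """Corrupt a sample with Gaussian noise, clipped to the valid pixel range [0, 1]."""
    return [min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in x]

def make_denoising_pairs(samples, sigma=0.1, seed=0):
    """Return (noisy_input, clean_target) pairs for denoising-autoencoder training."""
    rng = random.Random(seed)
    return [(add_gaussian_noise(x, sigma, rng), x) for x in samples]

clean_images = [[0.0, 0.5, 1.0, 0.5], [1.0, 1.0, 0.0, 0.0]]  # toy 4-pixel images
pairs = make_denoising_pairs(clean_images)
for noisy, clean in pairs:
    # The model would receive `noisy`, and its loss would compare the output with `clean`.
    print([round(v, 2) for v in noisy], "->", clean)
```

Because the target is the clean version, the network cannot simply copy its input; it has to learn which variations are noise.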

Understanding Autoencoder Functions:

For dimensionality reduction, autoencoders generate a compact representation of the data and then reconstruct the original data from it.

This process keeps the most significant information and discards the rest.

The process has two stages, encoding and decoding.

Encoding compresses the input data into a lower-dimensional representation.

Decoding uses that encoded representation to recreate the original data.

Understanding Autoencoder Origins:

Autoencoders originated in early research on artificial neural networks. Advances in unsupervised learning, together with the need for good data representations, have increased their prominence.

Autoencoder Progress: As AI has progressed, autoencoders have become more versatile and increasingly important in unsupervised learning, feature extraction, and generative modeling.

Autoencoder Evolution Highlights: The invention of variational autoencoders (VAEs) extended autoencoders into generative modeling, a field they share with generative adversarial networks (GANs); some models even combine the two approaches.

Autoencoders Revolutionize AI: Autoencoders empower AI by learning useful data representations automatically, supporting applications in computer vision, natural language processing, and anomaly detection.

Innovations and Discoveries: Advances in unsupervised learning and feature extraction have relied on autoencoders to represent and manipulate complex data for AI applications.

Autoencoders and AI Innovation: Unsupervised feature learning and realistic data generation make autoencoders valuable for building new AI solutions and for advancing image recognition, language modeling, and recommendation systems.

Applications of Autoencoders (AE)

Conventional inspection methods rely on people to identify flaws and deviations manually.

Because manual checks are time-consuming, demanding, and error-prone, modern approaches use anomaly detection algorithms instead.

Deep neural networks are attractive for this task because they can discover complex correlations in large datasets.

Training a deep neural network on both normal and abnormal samples lets it capture fine-grained patterns in high-dimensional visual features and distinguish defective from non-defective items.

This approach, known as supervised learning, has produced excellent commercial results.

AEs excel at modeling complex data patterns and finding subtle abnormalities, which makes them popular anomaly detectors.

Fraud detection and other tasks with highly imbalanced classes benefit from anomaly detection models, because abnormal examples are too rare to train a conventional classifier reliably.

AEs can be used in supervised, unsupervised, and semi-supervised anomaly detection.
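A common unsupervised recipe is to train the autoencoder on normal data only and flag inputs that it reconstructs poorly. To keep the sketch self-contained, the "trained" model below is a hand-set linear autoencoder that projects 2-D points onto the direction (1, 1)/√2, a stand-in for a learned model rather than a trained network. The threshold rule (1.5 times the largest error seen on normal data) is also an illustrative assumption; in practice, thresholds are often set from a percentile of validation errors.

```python
import math

# Hypothetical stand-in for a trained linear autoencoder with a 1-unit bottleneck:
# it has "learned" that normal points lie close to the line y = x.
W = (1 / math.sqrt(2), 1 / math.sqrt(2))

def reconstruct(x):
    h = W[0] * x[0] + W[1] * x[1]  # encode: project onto the learned direction
    return (h * W[0], h * W[1])    # decode: map the 1-D code back to 2-D

def reconstruction_error(x):
    x_hat = reconstruct(x)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

normal_data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.05), (0.5, 0.45)]
threshold = 1.5 * max(reconstruction_error(x) for x in normal_data)

def is_anomaly(x):
    return reconstruction_error(x) > threshold

print(is_anomaly((2.5, 2.45)))  # close to y = x: False
print(is_anomaly((3.0, -1.0)))  # far from y = x: True
```

The model never needs labeled anomalies: anything unlike the normal training data simply reconstructs badly.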

Building an Autoencoder

Building an autoencoder is a matter of finding a good trade-off between the complexity of the model and the characteristics of the dataset.

As a minimal guide, this section outlines the core idea you need before choosing a framework and building a customized autoencoder for your specific requirements.

An autoencoder network is trained to reproduce its input as its output.

Consider this scenario: an autoencoder compresses an image of a handwritten digit into a lower-dimensional latent representation and then reconstructs the image from that representation.

By minimizing the reconstruction error during training, the autoencoder learns to compress data effectively.
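The training idea can be sketched in plain Python for the smallest possible case: 2-D points lying on the line y = 2x, squeezed through a 1-unit bottleneck. The layer sizes, toy data, initial weights, learning rate (0.1), and epoch count (200) are illustrative assumptions; a real image autoencoder, such as one for handwritten digits, would use a framework with automatic differentiation rather than hand-derived gradients.

```python
def train_linear_autoencoder(data, epochs=200, lr=0.1):
    """Train a 2 -> 1 -> 2 linear autoencoder with full-batch gradient descent on MSE."""
    enc = [0.1, 0.1]  # encoder weights: h = enc[0]*x[0] + enc[1]*x[1]
    dec = [0.1, 0.1]  # decoder weights: x_hat = (dec[0]*h, dec[1]*h)
    n = len(data)
    history = []
    for _ in range(epochs):
        grad_enc, grad_dec, loss = [0.0, 0.0], [0.0, 0.0], 0.0
        for x in data:
            h = enc[0] * x[0] + enc[1] * x[1]          # forward: encode to 1 unit
            r = [dec[j] * h - x[j] for j in range(2)]  # residual x_hat - x
            loss += (r[0] ** 2 + r[1] ** 2) / 2 / n    # MSE over 2 features, averaged over samples
            dh = r[0] * dec[0] + r[1] * dec[1]         # gradient of the sample loss w.r.t. h
            for j in range(2):
                grad_dec[j] += r[j] * h / n
                grad_enc[j] += dh * x[j] / n
        history.append(loss)
        for j in range(2):                             # gradient-descent update
            enc[j] -= lr * grad_enc[j]
            dec[j] -= lr * grad_dec[j]
    return enc, dec, history

# Toy data: 2-D points on the line y = 2x, so one latent unit is enough.
data = [(t, 2 * t) for t in (-1.0, -0.5, 0.5, 1.0)]
enc, dec, history = train_linear_autoencoder(data)
print(f"loss: {history[0]:.4f} -> {history[-1]:.6f}")

h = enc[0] * 2.0 + enc[1] * 4.0
print([round(dec[0] * h, 3), round(dec[1] * h, 3)])  # reconstruction of (2, 4)
```

The falling loss is exactly the "lowering reconstruction errors" described above; with real data, the same loop runs over mini-batches and many more parameters.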

The Core: Challenges and Solutions

Autoencoders are powerful, but getting them to work well involves some difficulties, such as overfitting and learning meaningful representations of the data.

Addressing these difficulties is critical for getting the most out of an autoencoder.

Autoencoders have many uses in deep learning, but several limitations can impair their performance and efficiency.

Here, we examine eight autoencoder challenges:

1. Choosing an Architecture

Finding the right autoencoder structure is crucial yet tricky. The design should be complex enough to capture the critical structure of the data, but not so complex that it overfits.

2. Hyperparameter Tuning

Fine-tuning hyperparameters such as the number of layers, the number of neurons per layer, and the learning rate takes skill, as well as trial and error.

3. Narrow Bottleneck Layer

A bottleneck layer that is too narrow may cause the autoencoder to discard essential information, resulting in poor reconstruction of the input data.
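The information loss from a too-narrow bottleneck can be seen in a toy case. The sketch below uses hand-chosen orthonormal weights instead of trained ones; for this centered data, whose variance is 9 along the x-axis and 1 along the y-axis, an optimally trained linear autoencoder would span the same subspace as principal component analysis (PCA). The data, weights, and helper names are illustrative assumptions.

```python
def project_reconstruct(x, basis):
    """Linear autoencoder with tied orthonormal weights:
    encode h_k = <b_k, x>, then decode x_hat = sum_k h_k * b_k."""
    code = [sum(b_i * x_i for b_i, x_i in zip(b, x)) for b in basis]
    return [sum(h * b[i] for h, b in zip(code, basis)) for i in range(len(x))]

def mse(x, x_hat):
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

# Centered toy data: variance 9 along x, 1 along y, no correlation.
data = [(3.0, 1.0), (-3.0, -1.0), (3.0, -1.0), (-3.0, 1.0)]
bottlenecks = {
    "2 units": [(1.0, 0.0), (0.0, 1.0)],  # keeps both directions
    "1 unit": [(1.0, 0.0)],               # keeps only the high-variance x-direction
}
errors = {}
for name, basis in bottlenecks.items():
    errors[name] = sum(mse(x, project_reconstruct(x, basis)) for x in data) / len(data)
    print(name, errors[name])
```

With two code units, the data is reproduced exactly; with one unit, the variation along y is lost entirely, giving a mean squared error of 0.5.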

4. Overfitting

Autoencoders may overfit the training data, especially when the data is high-dimensional or the network design is complicated.
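Overfitting is usually detected by tracking the reconstruction loss on held-out validation data. One common remedy, early stopping, can be sketched as below. The function name, the `patience` and `min_delta` parameters, and the loss values are illustrative assumptions rather than any particular library's API; deep learning frameworks typically ship their own early-stopping utilities.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the best epoch, stopping once the validation loss has not
    improved by more than min_delta for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Hypothetical validation reconstruction losses: they fall, then rise as the model overfits.
val_losses = [0.90, 0.60, 0.45, 0.40, 0.41, 0.43, 0.47, 0.52]
print(early_stopping_epoch(val_losses))  # 3
```

The weights saved at the returned epoch are the ones kept, before the model starts memorizing the training set.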

5. Intensive Computation

Training autoencoders, particularly deep ones, on huge datasets requires a lot of computation and time.

6. Capturing Data Variability

Autoencoders may struggle to capture the variability in complex data, especially when the input is noisy or contains irrelevant information.

7. Input Data Quality

Autoencoder performance depends on high-quality input data. Noise, outliers, and missing data can harm learning and degrade the quality of the learned representation.
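Because missing values and badly scaled features both affect training, inputs are usually cleaned before they reach the autoencoder. A minimal preprocessing sketch in plain Python follows; mean imputation of missing values (written as `None`) and per-feature standardization are common choices assumed here, not the only options, and this sketch does not handle outliers.

```python
import statistics

def preprocess(rows):
    """Impute missing values (None) with the column mean, then standardize each column
    to zero mean and unit variance (population standard deviation)."""
    n_cols = len(rows[0])
    cols = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        mean = statistics.mean(observed)
        filled = [mean if r[j] is None else r[j] for r in rows]
        std = statistics.pstdev(filled) or 1.0  # guard against constant columns
        cols.append([(v - mean) / std for v in filled])
    return [list(row) for row in zip(*cols)]

rows = [[1.0, 10.0], [2.0, None], [3.0, 30.0]]  # hypothetical raw feature rows
clean = preprocess(rows)
print(clean)
```

Imputing with the mean leaves the column mean unchanged, so the same value can be reused for centering.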

8. Generalization to New Data

Finally, autoencoders may not generalize well to new data.

This limitation can be difficult to overcome when the model must perform well on data that differs considerably from the training sample. Despite these disadvantages, autoencoders are adaptable and robust tools used in many domains.

They are efficient and flexible for dimensionality reduction, image compression, and anomaly detection. Understanding them more deeply, including questions such as whether they are CNNs, requires further study: an autoencoder is defined by its encode-and-reconstruct objective, and it can be built from convolutional layers or from other layer types. This makes autoencoders a wonderful starting point for AI and machine learning research.

The Future of Autoencoders

Technological advancements in artificial intelligence and machine learning drive the development of autoencoders.

The outlook is promising, and applications are likely to extend into areas beyond those explored today.

Autoencoders in Real-World Scenarios

Autoencoders are used in many real-world sectors, from healthcare, for medical image analysis, to finance, for fraud detection.

Their versatility and efficiency have proven valuable in tackling complex real-world problems.

Autoencoders vs. Other Neural Networks

Understanding the subtleties that distinguish autoencoders from other neural networks, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), is important for choosing the appropriate model for your project.

Getting Started with Autoencoders

If you are eager to dive into the world of autoencoders, many resources, communities, and forums can help you get started.

Summing Up

Autoencoders embody the union of simplicity and complexity in machine learning: a simple objective, reconstructing the input, yields rich learned representations.

They offer insight into how neural networks can capture the underlying structure of data rather than merely memorize it.
