Foundational Concepts of Machine Learning

- Posted by 3.0 University

- Categories Artificial Intelligence

- Date March 21, 2024

Embark on a journey through the fascinating world of machine learning algorithms, where we demystify the key players from Linear Regression to Neural Networks and unveil the magic behind how machines learn from data to make predictions and decisions.

Foundational Machine Learning Algorithms

Linear Regression

Linear regression is one of the simplest machine learning algorithms. It predicts a numeric value when the relationship between the input and output variables is approximately linear.
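To make the idea concrete, here is a minimal sketch of simple (one-variable) linear regression fitted by ordinary least squares. The function names `fit_line` and `predict` and the toy data are assumptions chosen for illustration, not something taken from the book discussed in this section.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predict a numeric value for a new input."""
    return slope * x + intercept


# Toy data that lies exactly on the line y = 2x + 1 (illustrative only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
prediction = predict(5.0, slope, intercept)
```

Real data rarely sits exactly on a line, so in practice the fitted line is the one that minimises the squared prediction errors rather than one that passes through every point.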

“An Introduction to Statistical Learning” by James, Witten, Hastie, and Tibshirani provides a comprehensive exploration of linear regression and its practical applications. The authors describe it as a useful tool for predicting quantitative responses. Linear regression has been covered extensively in textbooks for many years.

Although it can seem less exciting than more contemporary methods, linear regression remains a widely used statistical learning technique.

Moreover, many advanced statistical learning techniques build upon and extend the principles of linear regression.

This makes linear regression an excellent foundation to begin our journey. Therefore, it is essential to have a strong grasp of linear regression before delving into more advanced learning methods.

Logistic Regression

Despite what its name suggests, logistic regression is used for classification tasks, not regression. It estimates the probability that a given input belongs to a particular category.
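As a rough illustration of how that probability is computed, the sketch below passes a weighted sum of the inputs through the logistic (sigmoid) function and thresholds the result at 0.5. The weights, bias, and function names are hypothetical values picked by hand for this example; a real model would learn them from data.

```python
import math


def predict_proba(features, weights, bias):
    """Probability that the input belongs to the positive class."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def predict_class(features, weights, bias, threshold=0.5):
    """Turn the probability into a class label (1 or 0)."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical, hand-picked parameters (not learned from data).
weights = [1.5, -0.5]
bias = -1.0

p_high = predict_proba([2.0, 2.0], weights, bias)   # z = 1.0
p_even = predict_proba([1.0, 1.0], weights, bias)   # z = 0.0
label_low = predict_class([0.0, 0.0], weights, bias)  # z = -1.0
```

The output is a number between 0 and 1, which is why the method suits classification: the threshold decides which side of the boundary an input falls on.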

In “Applied Logistic Regression” (2013), D.W. Hosmer Jr., S. Lemeshow, and R.X. Sturdivant make the essentials of logistic regression classification easy to understand.

Applied Logistic Regression explores various applications in the field of health sciences and focuses on selecting themes that align with modern statistical tools.

The book explores the latest techniques for building, interpreting, and evaluating logistic regression models. Its most notable additions are:

- An exploration of the analysis of correlated outcome data.

- Additional material on Bayesian approaches and model fit.

- Comprehensive data from real-world studies to illustrate each approach.

- Detailed interpretation of the findings, along with exercises.

Decision Trees

Decision trees use a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility.

An essential work on the subject is “Classification and Regression Trees” by Breiman, L., Friedman, J., Olshen, R.A., & Stone, C.J. (1984).

Understanding Decision Tree Induction

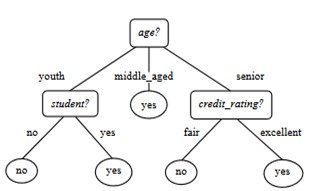

Decision tree induction learns a decision tree from labeled training tuples. The tree is structured like a flowchart, with internal nodes representing attribute tests, branches representing test outcomes, and leaf nodes representing class labels.

The root node is the topmost node of the tree.

Here is a typical decision tree.

The tree represents the concept of buying a computer: it predicts whether a customer at AllElectronics is likely to make a purchase.

Ovals represent leaf nodes, while rectangles depict internal nodes. Some decision tree methods can result in trees that are not strictly binary, while others only generate binary trees.

The book explores important points, such as:

- Decision trees classify an unknown tuple (X) by testing its attribute values against the tree.

- A path is traced from the root to a leaf node, which holds the predicted class of the tuple.

- Decision trees can easily be converted into classification rules.
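The three points above can be sketched in a few lines. The tree below is a hand-written toy in the spirit of the customer example; the attribute names, branch values, leaf labels, and the `buys_computer` class name are all assumptions made for illustration and are not copied from the figure.

```python
# Internal nodes are dicts; leaf nodes are plain class-label strings.
# Hypothetical toy tree, written by hand rather than induced from data.
TREE = {
    "attribute": "age",
    "branches": {
        "youth": {
            "attribute": "student",
            "branches": {"no": "no", "yes": "yes"},
        },
        "middle_aged": "yes",
        "senior": {
            "attribute": "credit_rating",
            "branches": {"fair": "yes", "excellent": "no"},
        },
    },
}


def classify(node, record):
    """Follow the path from the root to a leaf and return its class label."""
    while isinstance(node, dict):
        value = record[node["attribute"]]
        node = node["branches"][value]
    return node


def extract_rules(node, conditions=()):
    """Turn every root-to-leaf path into one IF-THEN classification rule."""
    if not isinstance(node, dict):
        antecedent = " AND ".join(f"{a} = {v}" for a, v in conditions)
        return [f"IF {antecedent} THEN buys_computer = {node}"]
    rules = []
    for value, child in node["branches"].items():
        rules += extract_rules(child, conditions + ((node["attribute"], value),))
    return rules


customer = {"age": "youth", "student": "yes", "credit_rating": "fair"}
predicted = classify(TREE, customer)
rules = extract_rules(TREE)
```

Each leaf yields exactly one rule, so a tree with five leaves produces five rules. This one-to-one mapping is what makes trees so easy to read.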

What makes decision tree classifiers so popular?

Decision tree classifiers are ideal for exploratory knowledge discovery as they require no domain expertise or parameter configuration.

Decision trees are capable of handling data with multiple dimensions. Their knowledge representation using trees is clear and accessible.

Decision tree induction offers a straightforward and efficient approach to learning and categorizing data. In general, decision tree classifiers are quite accurate, although how well they perform depends on the data at hand.

Various fields such as medicine, industry, financial analysis, astronomy, and molecular biology widely use decision tree induction techniques for categorization purposes. Decision trees are fundamental to many rule-induction systems used in the business world.

Support Vector Machines (SVM)

SVMs are powerful classification algorithms that can be applied to both linearly and non-linearly separable data.

They work by finding the boundary that best separates the data into classes. Cortes and Vapnik introduced support-vector networks in their 1995 paper, “Support-Vector Networks.”

Published in the journal Machine Learning, the paper is essential reading for a solid understanding of SVMs. It presents the support-vector network as a new learning machine for two-group classification problems.

The machine maps input vectors non-linearly to a very high-dimensional feature space. A linear decision surface is then constructed in this feature space.
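The sketch below illustrates only the mapping idea, not SVM training. One-dimensional points that no single threshold can separate become linearly separable once each point is mapped to the pair (x, x²). The feature map, the weights `W`, and the offset `B` are hand-picked assumptions for this toy case; an actual SVM would learn the decision surface from the data.

```python
def feature_map(x):
    """Map a 1-D input non-linearly into the 2-D feature space (x, x**2)."""
    return (x, x * x)


# Hypothetical linear decision surface in feature space: W . phi(x) + B = 0.
# Chosen by hand for this example, not produced by an SVM solver.
W = (0.0, 1.0)
B = -2.5


def decide(x):
    """Classify x as +1 or -1 using the linear surface in feature space."""
    phi = feature_map(x)
    score = sum(w_i * p_i for w_i, p_i in zip(W, phi)) + B
    return 1 if score > 0 else -1


# Outer points belong to class +1, inner points to class -1.
points = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
labels = [1, 1, -1, -1, -1, 1, 1]
predictions = [decide(x) for x in points]
```

In the original one-dimensional space the classes interleave, yet in the mapped space a single straight line (x² = 2.5) divides them.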

Special properties of this decision surface give the learning machine a high ability to generalize. The idea had previously been implemented only for the restricted case in which the training data can be separated without errors; the paper extends it to non-separable training data.

Using polynomial input transformations, support-vector networks demonstrate high generalization ability. The paper also compares the support-vector network with various classical learning algorithms that took part in an Optical Character Recognition (OCR) benchmark study.

K-Nearest Neighbours (kNN)

kNN is a straightforward, instance-based learning technique where the class of a sample is found by choosing the majority class among its k nearest neighbours.
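A minimal sketch of that majority-vote rule, assuming Euclidean distance and a small made-up training set (the function name `knn_predict` and the data are illustrative assumptions):

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Return the majority class among the k training points nearest to query.

    train is a list of (features, label) pairs.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy training set with two well-separated classes (illustrative only).
train = [
    ((1.0, 1.0), "a"), ((1.5, 2.0), "a"), ((2.0, 1.0), "a"),
    ((6.0, 6.0), "b"), ((7.0, 6.5), "b"), ((6.5, 7.0), "b"),
]
near_a = knn_predict(train, (2.0, 2.0), k=3)
near_b = knn_predict(train, (6.0, 7.0), k=3)
```

Choosing an odd k for two-class problems avoids tied votes. There is no training phase at all: the stored samples are the model.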

The kNN algorithm is widely used in pattern recognition.

Ensemble methods based on kNN reduce the effect of outliers by identifying the set of data points in the feature space that are nearest to an unseen observation.

These selected points then predict the response of the unseen observation through majority voting.

Typical kNN ensembles find the k nearest observations within a region bounded by a sphere, based on a predefined value of k. This approach may not work well when the test observation follows a pattern of same-class points that lie along a path extending beyond that sphere.

One proposed ensemble method instead determines the k nearest neighbours in k steps. Starting from the observation nearest to the test point, the method repeatedly identifies the single observation closest to the one chosen in the previous step.
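Here is a rough sketch of that step-by-step search for a single learner. It is an interpretation of the description above under stated assumptions (Euclidean distance, no bootstrap sampling, no feature subsets), not the authors' implementation, and the function names and data are hypothetical.

```python
import math
from collections import Counter


def stepwise_neighbours(train, query, k):
    """Pick k neighbours in k steps.

    Step 1 takes the training point nearest to the query. Every later step
    takes the unused training point nearest to the previously chosen one.
    Returns the indices of the chosen points in the order they were found.
    """
    remaining = list(range(len(train)))
    chosen = []
    anchor = query
    for _ in range(min(k, len(train))):
        nearest = min(remaining, key=lambda i: math.dist(train[i][0], anchor))
        remaining.remove(nearest)
        chosen.append(nearest)
        anchor = train[nearest][0]
    return chosen


def stepwise_predict(train, query, k):
    """Majority vote over the neighbours found by the step-by-step search."""
    votes = Counter(train[i][1] for i in stepwise_neighbours(train, query, k))
    return votes.most_common(1)[0][0]


# Class "a" lies along a path leading away from the query at the origin;
# two "b" points sit nearer to the query than the far end of that path.
train = [
    ((1.0, 0.0), "a"), ((2.0, 0.0), "a"), ((3.0, 0.0), "a"),
    ((0.0, 1.5), "b"), ((-1.5, 0.0), "b"),
]
path = stepwise_neighbours(train, (0.0, 0.0), k=3)
predicted = stepwise_predict(train, (0.0, 0.0), k=3)
```

In this toy case the search walks along the three "a" points, whereas the three points closest to the query itself would be one "a" and two "b" points.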

In each base learner of the ensemble, this search runs for k steps on a random bootstrap sample with a random subset of features from the feature space. The final predicted class of the test point is determined through a majority vote among the predicted classes of all base models. This ensemble technique has been evaluated on 20 benchmark datasets and compared with conventional methods, including kNN-based models.

The evaluation measures include classification accuracy, kappa, and Brier score. Boxplots illustrate the comparison between the proposed approach and other state-of-the-art methods. In many instances, the proposed technique proved more effective than traditional methods.

An extensive simulation analysis was also conducted to evaluate the proposed strategy.

In Summary

Machine learning algorithms have changed the way we see the world because they make our technologies much smarter.

This guide has covered several foundational machine learning algorithms, what they are used for, and where to read more about each one.

Whether you are just starting out or want to strengthen your skills, machine learning offers plenty of room for exploration and innovation.

Look out for our next article, which will cover areas such as Advanced Machine Learning Algorithms, Neural Networks, Deep Learning (CNNs and RNNs), GANs, and a lot more.
